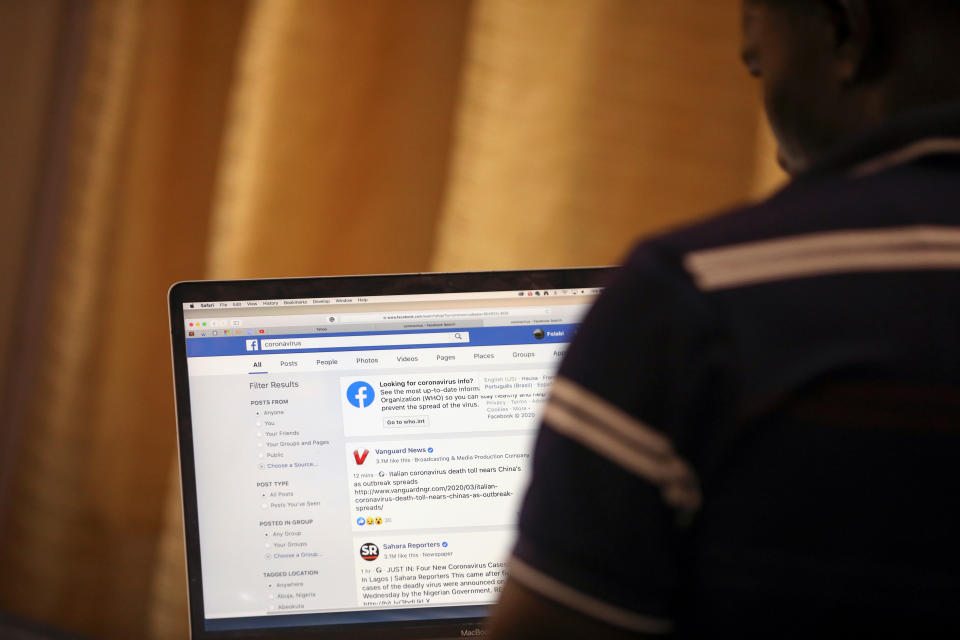

Facebook is 'the 800-pound gorilla in the misinformation market'

Facebook (FB) recently announced that it would ban advertisements that discourage vaccination, as well as content related to rabid QAnon conspiracy theories.

But according to Imran Ahmed, CEO of the Center for Countering Digital Hate, it's too little, too late, especially since the platform won't touch existing anti-vaccine posts.

“Facebook, they are the 800-pound gorilla in the misinformation market,” Ahmed said on Yahoo Finance’s The First Trade (video above). “That’s the truth. … Facebook is the company that can change the lens through which we see the world by repeated misinformation being spread to people. It can actually persuade people the world is a different way.”

It’s not just about vaccine misinformation or QAnon, Ahmed added.

“We saw it with coronavirus and people believing that masks were dangerous or that masks weren’t necessary,” he said. “We see it with identity-based hate, which is what my organization typically looks at. In fact, it’s Facebook that has the biggest problem and has done the least, ironically, to deal with that problem.”

Facebook did not respond to a request for comment.

‘Concerted misinformation actors ... growing rapidly’

After the 2016 election season, during which foreign actors exploited Facebook's weak content moderation policy, Facebook developed a "fact-checking" system that it uses to label videos and posts it deems factually inaccurate.

Ahmed argued that the company’s moderation response has not been enough.

“What they’ve done essentially is they’ve looked at a very, very dirty apartment and they plumped up the cushions and not cleaned anything else,” he said.

For example, research by the Center for Countering Digital Hate found that tens of millions of people follow "individuals, … groups, … pages" that spread anti-vaccine misinformation.

“38 million we found just on Facebook across the U.K. and the U.S., and that’s been growing rapidly, so the organic reach by concerted misinformation actors has been growing rapidly,” Ahmed said. “In fact, dealing with the ads problem — it was small in terms of numbers but it was extraordinary that there was any point at which Facebook had a business that was based on taking adverts from misinformation actors and spreading misinformation about vaccines into millions of news feeds.”

Other platforms have taken steps to curb misinformation: Twitter increasingly labels content it deems factually inaccurate, most notably posts from President Trump's account, which has 87 million followers.

“Twitter is, in technical terms, we would say that it’s more about the tactical adjustment of what’s on the agenda,” Ahmed said.

‘Misinformation is being fed to you’

Overall, according to Ahmed, algorithms designed primarily to maximize engagement have made the information space worse.

“There’s very little that individual users can do because they are being placed into a platform in which information and misinformation flow unabated, intermingling without an easy way to discern between the two,” he said. “Misinformation, because of course it’s controversial, it’s chewy, it’s what people get engaged in, it’s actually advantaged on the platform because it’s more engaging and what we see on platforms now isn’t a literal timeline. It is an algorithmic and artificially generated list of the most engaging content.”

The more people engage with a post, the more likely others are to see it and engage with it too, boosting its popularity further. Once an algorithm figures out what a user is interested in, it is more likely to deliver similar content each time that user returns.

“That’s great for keeping people on platforms,” Ahmed said. “It’s great for winning the attention economy in an evening when you think to yourself ‘What should I do? Should I spend half an hour trying to figure out what to watch on Netflix and then choosing nothing, which is what most of my evenings feel like? Or is it to go on Facebook and see what my chums are up to?’ That’s what you end up doing.”

In that space, he said, “misinformation is being fed to you. Even today, you can find anti-scientific information being dispersed on those platforms as adverts. Someone can pay to, for example, dissuade you about the science of climate change, can pay to dissuade you about even now certain types of vaccines, about coronavirus information. These are very dangerous platforms and we’ve known this for some time.”

Adriana Belmonte is a reporter and editor covering politics and health care policy for Yahoo Finance. You can follow her on Twitter @adrianambells.
